An improved routing algorithm for wireless sensor networks based on LEACH

Routing algorithms are one of the core technologies in wireless sensor network research. Building on the LEACH algorithm, this paper proposes DE-LEACH, a two-layer LEACH algorithm that selects a second-layer cluster head based on distance and energy. It effectively prevents nodes that have low energy or are far from the base station from communicating directly with the base station, and it improves network survival time and data collection capability. An event-driven approach reduces the amount of data sent, further extending the network's lifetime.

Keywords: routing algorithm; second-level cluster head selection; network survival time; data collection capability; data fusion; event-driven

0 Introduction

The continuous development of microelectronics, computer, and wireless communication technology has made it possible to design low-power, multi-function wireless sensors. A wireless sensor network deploys a large number of inexpensive, low-power sensor nodes in the monitoring area. Its purpose is to cooperatively sense, collect, and process information about the sensed objects in the network coverage area, and to send that information over a multi-hop, self-organizing wireless network to a remote base station (BS). Wireless sensor networks are regarded as one of the three major global high-tech industries of the future. They have broad application prospects and great value in fields such as military defense, disaster warning, environmental monitoring, and medical treatment.

Routing protocols are one of the core technologies of wireless sensor networks, and their performance largely determines the overall performance of the network. Routing algorithms have therefore always been a hotspot in wireless sensor network research. Depending on whether all nodes in the network perform the same functions, routing algorithms can be divided into flat and hierarchical routing. Flat routing scales poorly, and maintaining dynamically changing routes requires a large amount of control information, so maintenance is difficult when the network is deployed in areas that are hard for people to reach. Hierarchical routing overcomes these deficiencies: it scales well, requires relatively little control information, and has been widely used. LEACH is the first hierarchical routing protocol based on a multi-cluster structure, and its clustering idea has been adopted by many subsequent protocols, such as TEEN.

In the LEACH algorithm, each node autonomously decides whether to act as a cluster head in the current round according to the cluster head probability p. Once it becomes a cluster head, it informs the entire network by broadcast. Because cluster head selection is completely autonomous and random, no communication or coordination between nodes is required. The LEACH algorithm is therefore energy-saving, simple to implement, and scalable, and it is easy to deploy in areas that are hard for people to reach. However, random cluster head selection cannot guarantee the number or distribution of cluster head nodes in each round. This easily causes uneven energy consumption among the nodes, so node lifetimes are widely dispersed. Toward the end of the network lifetime, monitoring blind spots form and degrade overall network performance.

In this paper, a two-layer cluster head algorithm, DE-LEACH (Distance-Energy LEACH), is proposed on the basis of LEACH. The first-layer cluster head election mechanism is the same as in LEACH. A second-layer cluster head is then selected from the elected cluster heads according to a compromise between remaining energy and distance to the base station (BS). Each first-layer cluster head fuses the data it collects and sends the result to the second-layer cluster head, which performs a second fusion and forwards the monitoring data to the base station. Because the second-layer election considers both the remaining energy of a node and its distance to the base station, first-layer cluster heads that have little residual energy and are far from the base station avoid communicating with the base station directly. The simulation results show that DE-LEACH delays the death of the first node in the network, shortens the interval between the death of the first node and the death of the last node, and significantly increases the total amount of data collected by the network.

1 Introduction to the LEACH algorithm

The LEACH algorithm introduces the concept of rounds. The cluster head role rotates among the nodes from round to round so that energy consumption is spread across all nodes. Each round consists of two phases: cluster establishment and stable operation. To reduce management overhead, the stable operation phase lasts longer than the cluster establishment phase.

In the cluster establishment phase, each node autonomously decides whether to serve as a cluster head in the current round. Specifically, each node generates a random number between 0 and 1; if this number is less than a threshold T(n), the node acts as a cluster head in the current round. This random election ensures that the higher cost of communication between cluster heads and the base station is shared among the nodes in the network.

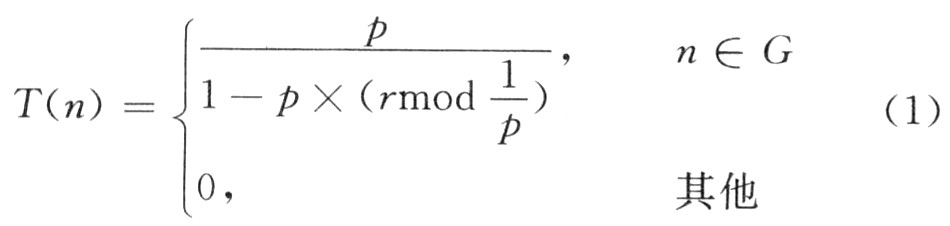

The threshold T (n) is defined as:

Here, p is the percentage of cluster head nodes among all nodes in the network, r is the number of rounds completed, and G is the set of nodes that have not served as cluster head in the last 1/p rounds. For example, in the first round (r = 0), every node becomes a cluster head with probability p, and a node that serves as cluster head in the first round does not serve again during the 1/p rounds that begin with this round (rounds 1 to 20). As the number of nodes eligible to serve as cluster head decreases, the probability that each remaining node becomes a cluster head must increase to maintain the number of cluster heads per round. After 1/p - 1 rounds, a node that has not yet been a cluster head becomes one with probability 1, so all remaining nodes become cluster heads. After 1/p rounds, all nodes can again decide independently whether to act as cluster heads. In the literature, the author demonstrates that the optimal cluster head percentage is p = 5%.
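The election rule can be illustrated with a short sketch. It assumes that the threshold in the figure is the standard LEACH form, T(n) = p / (1 - p (r mod 1/p)) for nodes in G and 0 otherwise; the function names and the `eligible` mapping are hypothetical and are not taken from the paper.

```python
import random


def leach_threshold(p: float, r: int, in_G: bool) -> float:
    """Standard LEACH threshold T(n); assumed to match the formula in the figure."""
    if not in_G:
        return 0.0                 # nodes outside G cannot be elected this cycle
    period = round(1 / p)          # rounds per rotation cycle, e.g. 20 for p = 0.05
    return p / (1 - p * (r % period))


def elect_cluster_heads(eligible, p, r, rng=random):
    """eligible maps node id -> True if the node is still in G.

    Each node draws its own random number in [0, 1) and becomes a
    cluster head when the draw is below its threshold.
    """
    return [node for node, in_G in eligible.items()
            if rng.random() < leach_threshold(p, r, in_G)]
```

With p = 0.05 the threshold is 0.05 at r = 0, rises to 0.1 at r = 10, and reaches approximately 1 at r = 19, which matches the behaviour described in the paragraph above.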

Once a cluster head is selected, it uses the CSMA MAC protocol to broadcast a packet to all nodes in the network announcing that it has become a cluster head. Assuming symmetric links in the network, each of the other nodes joins the cluster head whose signal it receives most strongly, which completes the cluster establishment phase.
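A minimal sketch of the joining step follows. It assumes that received signal strength decreases monotonically with distance, so the strongest cluster head is simply the nearest one; a real deployment would compare measured signal strength instead. The function name and the coordinate dictionaries are illustrative only.

```python
import math


def join_clusters(nodes, cluster_heads):
    """Map each ordinary node to the cluster head it would join.

    nodes and cluster_heads are dicts of id -> (x, y).
    ASSUMPTION: strongest received signal == smallest distance.
    """
    if not cluster_heads:
        return {}                  # no cluster head was elected this round
    membership = {}
    for node_id, pos in nodes.items():
        if node_id in cluster_heads:
            continue               # cluster heads do not join another cluster
        membership[node_id] = min(
            cluster_heads,
            key=lambda ch: math.dist(pos, cluster_heads[ch]),
        )
    return membership
```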

In the stable operation phase, ordinary nodes use the CSMA MAC protocol to send join packets to their cluster heads. After a cluster head receives the join packets, it generates a TDMA schedule, allocates a transmission slot to every node in the cluster, and broadcasts the schedule to its members. The cluster head then receives the data collected by each member, fuses it, and sends the result to the base station. During this phase the cluster head keeps its receiver on at all times, while an ordinary node turns on its transmitter only during its own slot and keeps it off at other times to save energy.

Compared with flat routing algorithms, the LEACH algorithm significantly reduces energy consumption and spreads energy dissipation across the entire network, effectively prolonging network survival time. In the literature, the author's simulation shows that the survival time of a LEACH network is about 6 times that of a network using the flat Direct Communication protocol, and about 10 times that of a network using the hierarchical fixed-cluster-head protocol Static Clusters.

However, completely autonomous random cluster head selection cannot guarantee the number or distribution of cluster head nodes in each round. Nodes that are far from the base station and have little energy may act as cluster heads, resulting in uneven energy consumption across the network. Node lifetimes then vary widely, and blind spots form toward the end of the network lifetime, which affects the overall performance of the network. To improve this situation, this paper proposes DE-LEACH, a two-layer LEACH algorithm that selects the second-layer cluster head based on distance and energy.

2 Two-layer LEACH algorithm DE-LEACH based on distance energy selection

Like the LEACH algorithm, the DE-LEACH algorithm is divided into a cluster establishment phase and a stable operation phase.

In the cluster establishment phase, each node first uses its own random number to decide whether to become a cluster head and notifies all nodes in the network, exactly as in LEACH. The difference is that each elected cluster head adds its remaining energy and its distance to the base station to the broadcast packet. The second-layer cluster head is then selected from the elected first-layer cluster heads according to a parameter Th that relates remaining energy to distance from the base station.

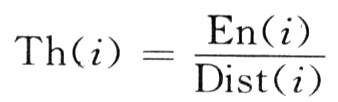

Th is defined as:

Where i is the node number in the network, En (i) is the remaining energy of the i-node, and Dist (i) is the distance from the i-node to the base station.

The second-layer cluster head is selected as follows: each cluster head j compares its own Th(j) with the Th values it has received and calculated for the other cluster heads. If its own value is the largest, it becomes the second-layer cluster head; if instead the Th(i) of another cluster head i is found to be the largest, node i is taken to be the second-layer cluster head. Note the following:

(1) The second-layer cluster head also performs the work of a first-layer cluster head: broadcasting, time slot allocation, data collection, and fusion;

(2) After calculating and comparing the Th values, every cluster head already knows which first-layer cluster head also serves as the second-layer cluster head, so the second-layer cluster head does not need to broadcast its identity. Because every cluster head has also received the node numbers of the other cluster heads, data can be transmitted in node-number order, so the second-layer cluster head does not need to broadcast a time slot allocation to the first-layer cluster heads. This saves broadcast overhead;

(3) Ordinary nodes do not need to know which node is the second-layer cluster head. They communicate only with their first-layer cluster head, and the second-layer cluster head also performs the function of a first-layer cluster head.
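The selection strategy can be sketched as follows. The actual definition of Th is given only in the figure and is not reproduced here, so the ratio En(i) / Dist(i) used below is purely a placeholder assumption that rewards high energy and short distance. The function names and the tie-breaking rule (lowest node number) are likewise hypothetical.

```python
def th(energy_j, dist_m):
    """ASSUMPTION: placeholder for the paper's Th; the real formula is in the figure."""
    return energy_j / dist_m


def select_second_layer_head(heads):
    """Return the id of the cluster head with the largest Th value.

    heads maps cluster head id -> (remaining energy in J, distance to BS in m).
    Every cluster head holds the same broadcast data, so each one can run
    this locally and reach the same answer without an extra broadcast.
    Ties go to the lowest node number (an assumption, not from the paper).
    """
    return max(sorted(heads), key=lambda i: th(*heads[i]))
```

For example, with heads `{3: (0.40, 120.0), 7: (0.45, 110.0), 12: (0.20, 105.0)}`, node 7 has the largest placeholder Th and would take the second-layer role.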

In the stable operation phase, ordinary nodes communicate with their first-layer cluster heads in the same way as in LEACH. However, after data collection and fusion are complete, the data packet is not sent directly to the base station. Instead, the first-layer cluster heads send their packets to the second-layer cluster head in successive time slots, ordered by cluster head node number. The second-layer cluster head performs a second fusion and sends the result to the base station.
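Because the upload order is derived from node numbers alone, each first-layer cluster head can compute its own slot locally. The sketch below illustrates this under the assumption that slots are numbered from 0 in ascending node-number order and that the second-layer cluster head takes no slot; the function name is hypothetical.

```python
def upload_schedule(head_ids, second_layer_id):
    """Map each first-layer cluster head to its slot for sending to the second layer.

    ASSUMPTION: slots start at 0 and follow ascending node number; the
    second-layer cluster head itself only receives and is given no slot.
    """
    senders = sorted(h for h in head_ids if h != second_layer_id)
    return {head: slot for slot, head in enumerate(senders)}
```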

The LEACH algorithm assumes that the base station is far from the monitoring area. If all first-layer cluster heads communicate directly with the base station, communication energy consumption is high, and large gaps in remaining energy easily develop among the nodes. The interval between the death of the first node and the death of the last node then becomes long, which leads to monitoring blind spots. The DE-LEACH algorithm effectively postpones the death of the first node, reduces the interval between the death of the first and last nodes, and shortens the time during which blind spots exist. Redeploying and managing the network once the nodes have died together is therefore more economical.

In addition, because the data collected by sensor nodes are correlated over short periods of time, an event-driven approach can be used to further extend the lifetime of the network. The following sections compare the performance of DE-LEACH and LEACH, and then simulate and analyze how much the lifetime of DE-LEACH improves once the event-driven mechanism is added.

3 Simulation analysis

3.1 Performance comparison between DE-LEACH and LEACH

Matlab is used to simulate and compare the LEACH algorithm and the DE-LEACH algorithm. The main comparisons are:

(1) The number of surviving nodes as a function of the round;

(2) The total number of data bits collected (before the second fusion) as a function of the round.
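The lifetime figures quoted later (death of the first node, death of 50% of the nodes) can be read off the surviving-node curve. A minimal sketch, assuming the curve is available as a list of alive-node counts indexed by round; the function name and the sample data are illustrative and are not simulation results from the paper.

```python
def round_when_dead(alive_per_round, total_nodes, dead_count):
    """Return the first round index at which at least dead_count nodes have died.

    alive_per_round[r] is the number of surviving nodes in round r.
    Returns None if that many nodes never die within the recorded rounds.
    """
    for r, alive in enumerate(alive_per_round):
        if total_nodes - alive >= dead_count:
            return r
    return None


# Illustrative curve only, not data from the paper.
alive = [100, 100, 99, 80, 50, 10, 0]
first_death = round_when_dead(alive, 100, 1)     # first node death
half_death = round_when_dead(alive, 100, 50)     # 50% of nodes dead
last_death = round_when_dead(alive, 100, 100)    # all nodes dead
```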

In the simulation, the wireless sensor network is assumed to consist of 100 identical sensor nodes randomly scattered over a 100 m × 100 m area, with the remote base station located at (x = 0, y = -100). The initial energy of each node is 0.5 J, the energy dissipated by the transmitting and receiving circuits is Elec = 50 nJ/b, and the data fusion cost is Eda = 5 nJ/b. The data packet length is 2 000 b, the broadcast packet length is 200 b, and the total bandwidth is 1 MHz. To simplify the simulation, communication within the monitoring area follows the free space model with an amplification factor Efs = 10 pJ/b/m², while communication between a cluster head node and the base station, because of the long distance (> 100 m), uses an amplification factor of 0.0013 pJ/b/m⁴.
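These parameters can be turned into per-packet energy costs. The sketch below assumes the first-order radio model commonly used with LEACH, in which transmitting k bits over distance d costs k·Elec plus an amplifier term proportional to d² (free space) or d⁴ (long distance). The text does not spell out this formula, so its form, the constant name `E_MP`, and the function names are assumptions.

```python
E_ELEC = 50e-9        # J/bit, transmitter and receiver circuit loss (Elec)
E_DA = 5e-9           # J/bit, data fusion cost (Eda)
E_FS = 10e-12         # J/bit/m^2, free space amplifier factor (Efs)
E_MP = 0.0013e-12     # J/bit/m^4, long-distance amplifier factor (name assumed)


def tx_energy(bits, dist_m, to_base_station=False):
    """Energy in joules to transmit `bits` over `dist_m` metres.

    ASSUMPTION: first-order radio model; the d^4 term is used only for
    cluster head to base station links, as in the simulation setup.
    """
    if to_base_station:
        return bits * E_ELEC + bits * E_MP * dist_m ** 4
    return bits * E_ELEC + bits * E_FS * dist_m ** 2


def rx_energy(bits):
    """Energy in joules to receive `bits`."""
    return bits * E_ELEC


def fusion_energy(bits):
    """Energy in joules to fuse `bits` of data."""
    return bits * E_DA
```

Under these assumptions, sending one 2 000 b packet over 50 m inside the monitoring area costs about 0.15 mJ, while sending the same packet 150 m to the base station costs about 1.4 mJ. This gap is why limiting the number of nodes that talk to the base station directly matters.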

Depending on the rate at which the second-layer cluster head fuses the data received from the first-layer cluster heads, three cases are simulated and compared here: no fusion, 50% fusion, and complete fusion.

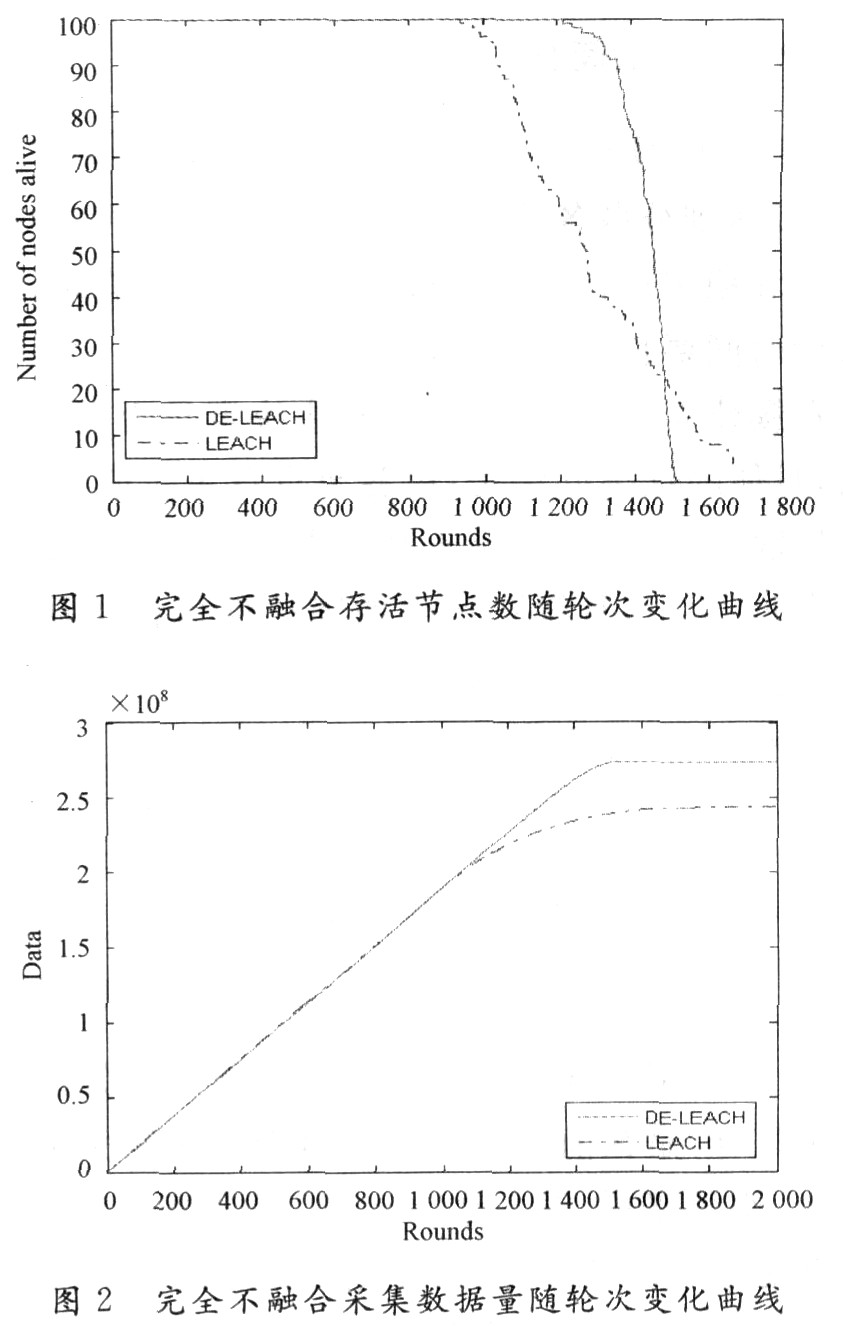

No fusion: the data packets sent by the first-layer cluster heads are concatenated into one long packet without processing and sent to the base station. In this case the second-layer cluster head merely receives and forwards data. The simulation shows that even with no fusion, the first node of DE-LEACH dies 30% later than that of LEACH, and 50% of the nodes die 15% later (Figure 1). The total number of data bits collected by DE-LEACH is 12% higher than that of LEACH (see Figure 2). Although the last node dies earlier than in LEACH, node lifetimes in the network are tightly clustered, so large monitoring blind spots exist only for a short time. When nodes must be redeployed to the monitoring area to ensure continuous and complete data collection, doing so is more cost-effective than with the LEACH algorithm.

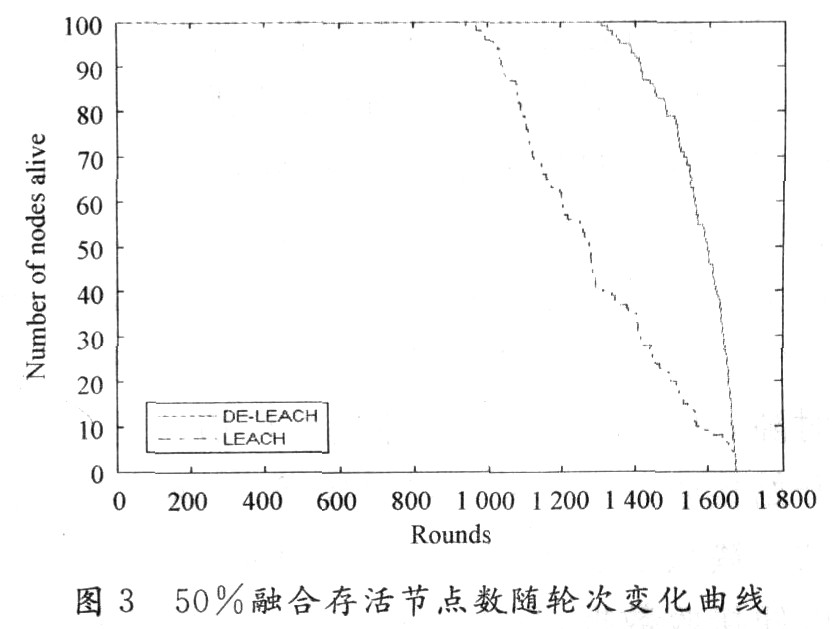

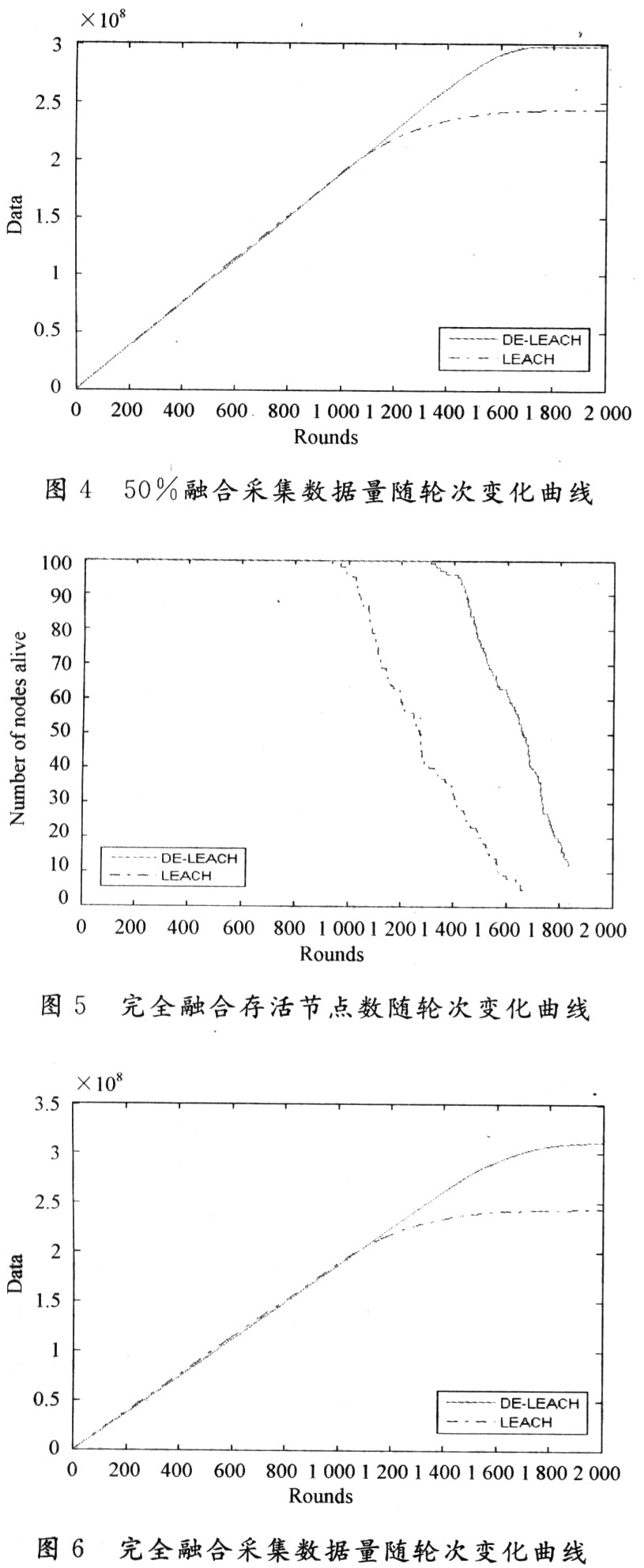

50% fusion: the data packets sent by the first-layer cluster heads are compressed by half before being sent to the base station. The packets sent by each first-layer cluster head still contain redundant information that the base station does not need, such as packet headers, and can therefore be merged further. The simulation shows that with 50% fusion, the first node of DE-LEACH dies 40% later than that of LEACH, and 50% of the nodes die 25% later (Figure 3). The total number of data bits collected by DE-LEACH is 22% higher than that of LEACH (Figure 4).

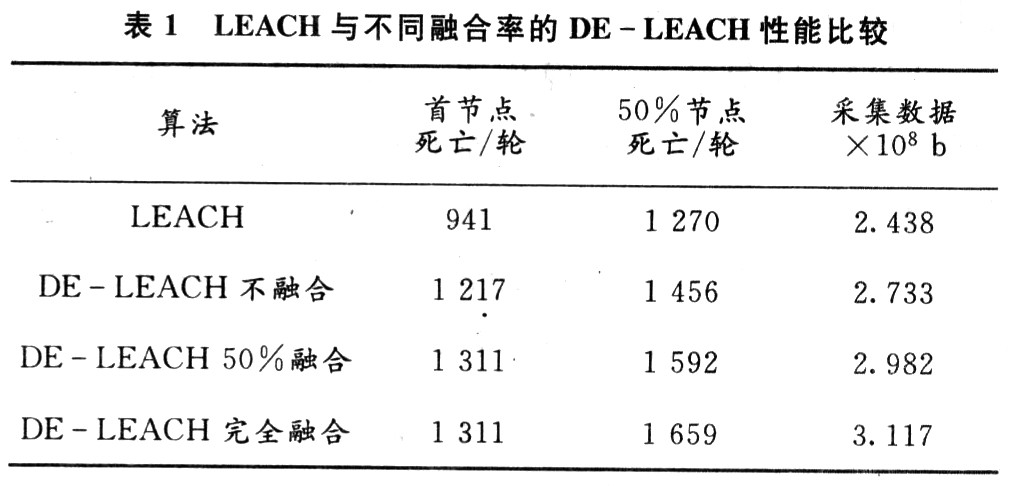

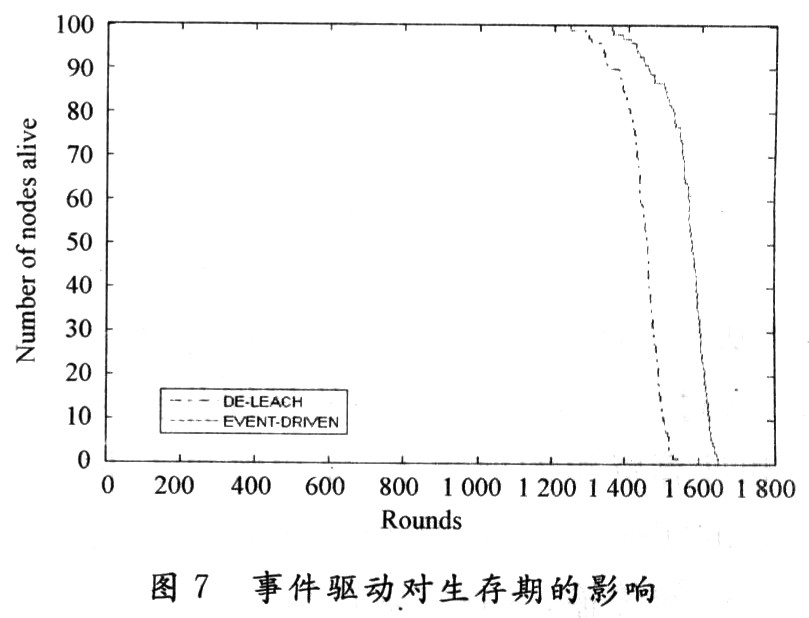

Complete fusion: the data packets sent by the first-layer cluster heads are compressed into a single 2 000 b packet and sent to the base station. Because the fusion rate is so high, not only data-level and feature-level fusion but also decision-level fusion is used. That is, what is finally transmitted to the base station is no longer raw data but the result of a comprehensive analysis of the collected data by the second-layer cluster head. The simulation shows that with complete fusion, the first node of DE-LEACH dies 40% later than that of LEACH, and 50% of the nodes die 30% later (Figure 5). The total number of data bits collected by DE-LEACH is 28% higher than that of LEACH (see Figure 6).
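The three fusion cases differ only in how many bits the second-layer cluster head forwards to the base station. The sketch below is one reading of the descriptions above: no fusion forwards every packet unchanged, 50% fusion halves the total, and complete fusion always produces one 2 000 b packet. The mode names and the function name are hypothetical, and the simulation in the paper may account for packet sizes differently.

```python
PACKET_BITS = 2000    # data packet length from the simulation setup


def bits_to_base_station(num_packets, mode):
    """Bits forwarded to the base station after the second fusion.

    num_packets is the number of 2 000 b packets to be merged.
    mode is "none", "half", or "full" (names are illustrative).
    """
    raw_bits = num_packets * PACKET_BITS
    if mode == "none":
        return raw_bits            # packets are concatenated and forwarded
    if mode == "half":
        return raw_bits // 2       # total volume compressed by 50%
    if mode == "full":
        return PACKET_BITS         # everything fused into one packet
    raise ValueError(f"unknown fusion mode: {mode!r}")
```

With five packets to merge, this gives 10 000 b, 5 000 b, and 2 000 b respectively.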

The simulation comparison shows that the DE-LEACH algorithm, which selects the second-layer cluster head by jointly considering the distance between a cluster head node and the base station and its remaining energy, performs significantly better than LEACH.

Advantages of the DE-LEACH algorithm: the death of the first node is delayed; the survival curve is significantly steeper than that of LEACH, so the interval between the death of the first node and the last node is shorter; compared with LEACH, node deaths are more concentrated, blind spots persist for a shorter time, and redeploying sensor nodes is more cost-effective; and the total amount of data collected increases significantly. Deficiencies of the DE-LEACH algorithm: because one extra hop is added between the cluster heads of the original LEACH algorithm and the base station, network delay increases. In addition, nodes need more computing power. In the simulation, obtaining a longer network survival time and a higher data collection volume requires a higher data fusion rate, which places higher demands on the data fusion capability of the nodes. In practical applications, the performance and cost requirements of the application should be weighed together.

3.2 Event-driven DE-LEACH lifetime analysis

Because the data collected by sensor nodes (such as temperature or pressure) are correlated over short periods of time, a threshold can be set. When the change between two adjacent readings exceeds this threshold, the node sends the data to the cluster head and stores the new reading in its memory. If the change is below the threshold, the reading is not sent and the previously stored value is kept as the reference, so that slow, gradual changes are still detected. The threshold can be set as a percentage of the previous reading or as a specific value, depending on the situation. The simulation in Figure 7 sets the threshold to 10% of the previous reading (with no fusion).
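The event-driven rule can be sketched as a small stateful filter on each node. The class and method names are hypothetical, and sending the very first reading unconditionally is an assumption, since the text does not say how the reference value is initialized.

```python
class EventDrivenNode:
    """Send a reading only when it differs enough from the stored reference."""

    def __init__(self, threshold_ratio=0.10):
        self.threshold_ratio = threshold_ratio   # 10% as in the Figure 7 setup
        self.stored = None                       # last reading that was sent

    def should_send(self, reading):
        if self.stored is None:
            # ASSUMPTION: the first reading is always sent to set the reference.
            self.stored = reading
            return True
        if abs(reading - self.stored) > self.threshold_ratio * abs(self.stored):
            self.stored = reading                # new reference after sending
            return True
        return False                             # keep the old reference


node = EventDrivenNode()
readings = [20.0, 20.5, 21.0, 22.5, 22.6]
sent = [x for x in readings if node.should_send(x)]
```

Here only 20.0 and 22.5 are sent. Because the reference stays at 20.0 while the readings drift upward, the gradual change is eventually caught once it exceeds 10% in total, even though no single step does.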

Figure 7 shows that adding the event-driven mechanism extends the network survival time by about 9%. For convenience, the simulation generates the collected data as random numbers, which are uncorrelated. When the data in a real application are correlated, the extension of survival time will be more pronounced. In addition, the threshold can be changed as needed, which makes the approach highly adaptable. The disadvantage of the event-driven mechanism is that the two most recent readings must be stored for comparison, which increases the storage required on the sensor nodes.

4 Conclusion

The routing protocol largely determines the overall performance of the network. As one of the core technologies of wireless sensor networks, routing protocols have therefore always been a research hotspot. The LEACH algorithm is a classic hierarchical routing protocol that uses cluster head rotation to spread energy consumption evenly across the network. Based on the LEACH algorithm, this paper proposes DE-LEACH, an improved two-layer LEACH algorithm that selects the second-layer cluster head based on distance and energy, and briefly analyzes the impact of an event-driven mechanism on network lifetime. The simulation results show that the algorithm further evens out energy consumption in the network, effectively prolongs network survival time, and improves the network's data collection capability.

The LEACH algorithm rests on several assumptions. One of them is that every node selected as a cluster head broadcasts to the entire network. This clearly increases energy loss when the network coverage is large, so multi-layer, multi-hop routing becomes the inevitable choice in large-scale applications. This will be one of the directions for future work.
