After Apple and Google entered the AR race one after another, excitement around the technology jumped sharply. With tens of millions of potential users within reach, developers have rushed to build compelling, immersive AR experiences for the market. The magic we have seen in videos seems to be just around the corner.

Indeed, we are closer to that dream than ever before, but immersive AR still needs many years of research, development, and design before it enters the mainstream. Below is an overview of the three key challenges facing AR.

Immersive field of view

A comparison of different fields of view in the same VR experience

Watching cool AR demos on YouTube is one thing; experiencing AR in real life is another. Even today's most advanced portable AR headsets fall short on field of view, even when compared with VR devices.

Take Microsoft HoloLens, for example. It is already the best AR headset you can buy, but its field of view is a meager 34 degrees, while even Google's inexpensive VR viewer, Cardboard, offers 60 degrees.

For AR, field of view is very important, because achieving any degree of immersion requires the AR world to blend seamlessly with the real world. If you can't take in the AR world all at once, you end up moving your head unnaturally to "scan" your surroundings, like looking at the world through a telescope. The brain can then no longer rely on intuitive mapping to treat the AR world as part of the real world, and the sense of immersion vanishes.

As a result, the experience never comes to feel natural. It also means that AR in this form cannot yet serve as a natural mode of human-computer interaction for the consumer and entertainment markets.

But what about the Meta 2 AR headset? It has a 90-degree field of view. Isn't that enough?

Indeed, the Meta 2 offers the widest field of view of any AR headset on the market, almost comparable to today's VR products. But the Meta 2 is still very bulky, and its optical system cannot be shrunk without giving up some field of view.

Meta 2 AR glasses

Meta 2's optics are actually very simple. The large "hat" on the headset hides a smartphone-like display that is angled down toward the ground. The oversized plastic visor is silvered on the inside, so it reflects the display's image into the user's eyes. To shrink the headset, you would have to shrink the display and the visor together, and the field of view would shrink with them. For developers, the Meta 2 is an excellent tool, but consumers are unlikely to accept it in its current form.
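The trade-off between optics size and field of view can be sketched with basic geometry. The snippet below is a simplified illustration, not a model of the Meta 2: it treats the visor as a flat aperture centered in front of the eye, and the function name and all dimensions are hypothetical.

```python
import math


def horizontal_fov_degrees(aperture_width_m: float, eye_distance_m: float) -> float:
    """Angle subtended at the eye by a flat aperture centered on the line of sight.

    Simplifying assumption: a flat, centered aperture. Real headset optics
    (curved combiners, lenses, waveguides) behave differently.
    """
    if aperture_width_m <= 0 or eye_distance_m <= 0:
        raise ValueError("width and distance must be positive")
    return math.degrees(2 * math.atan(aperture_width_m / (2 * eye_distance_m)))


# Hypothetical numbers for illustration only, not measurements of any product.
wide = horizontal_fov_degrees(0.10, 0.05)    # 10 cm aperture, 5 cm from the eye
narrow = horizontal_fov_degrees(0.05, 0.05)  # same distance, half the width

print(f"wide aperture:   {wide:.1f} degrees")
print(f"narrow aperture: {narrow:.1f} degrees")
```

Under these assumptions, halving the aperture width at a fixed eye distance cuts the field of view substantially, which is the constraint the paragraph above describes.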

ODG R9

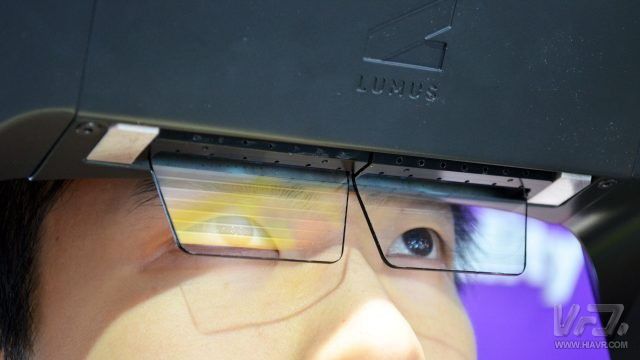

ODG uses a similar approach for its AR glasses, but with the optics slimmed down the field of view drops to 50 degrees, and the resulting product, the R-9, still costs as much as 1,800 US dollars. From the consumer market's point of view, it falls well short of what is needed. In contrast, Lumus uses light-guide technology to achieve a 55-degree field of view with optics only 2 mm thick.

Lumus

Although a field of view of around 50 degrees is quite good, it is still far from the 110 degrees of top VR devices, and consumers will always want more. Oculus has previously stated that a true sense of immersion requires a field of view of at least 90 degrees, so AR needs to get over this mountain as soon as possible.

Understand different objects

Both Apple's ARKit and Google's ARCore can deliver fresh and attractive AR experiences, but because of the limited capabilities of smartphones, both systems can only understand the world in terms of flat planes. That is why 99% of current iOS AR apps play out on a wall or a tabletop.

Why tabletops and walls? Because they are easy to classify. One floor or wall plane is much like any other, so the system can confidently assume that the plane extends in all directions until it intersects another plane.

Note that I say "understand" rather than "sense" or "detect". The system can "see" the shapes of objects other than tabletops and walls, but it cannot understand them.

For example, when you look at a mug, you see far more than a shape. You already know a great deal about the cup. How much?
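The "extend each plane until it meets another" assumption can be sketched with a little vector math. This is a minimal illustration and not ARKit or ARCore code: planes are written as n·x = d, and the function names are hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def plane_intersection(n1: Vec3, d1: float, n2: Vec3, d2: float,
                       eps: float = 1e-9) -> Optional[Tuple[Vec3, Vec3]]:
    """Return (point, direction) of the line where planes n1.x = d1 and
    n2.x = d2 meet, or None if the planes are parallel."""
    u = cross(n1, n2)                 # direction of the intersection line
    uu = dot(u, u)
    if uu < eps:                      # normals are parallel: no single line
        return None
    w = tuple(d1 * b - d2 * a for a, b in zip(n1, n2))  # d1*n2 - d2*n1
    p = cross(w, u)
    point = tuple(c / uu for c in p)  # a point lying on both planes
    return point, u


# Hypothetical scene: a floor at z = 0 and a wall at x = 2.
floor = ((0.0, 0.0, 1.0), 0.0)
wall = ((1.0, 0.0, 0.0), 2.0)
line = plane_intersection(*floor, *wall)
print(line)
```

The returned line is where the extended floor would stop and the wall would begin. Two parallel planes, such as a floor and a ceiling, return `None`.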

1. You already know that the cup is a separate object from the surface it sits on;

2. Even without looking into the cup, you know that it has room inside for liquids and other objects;

3. You know that the liquid in the cup will not leak out of it;

4. You know that we can drink water from a cup;

5. You know that the cup is light and easily knocked over, which would spill its contents.

......

It looks a bit silly, but I could go on. I list these points to make clear that computers lack this common-sense knowledge. They see only a shape, not a cup. A computer cannot see the whole interior of the cup to map out its complete shape, and it cannot even assume that there is space inside. Nor does it know that the cup is an object separate from the surface it sits on. But you know all of this, because to you it is a cup.

For a computer, seeing a shape is not enough; it must "understand" the cup. This is why AR demos have for years attached fiducial markers to objects to achieve more detailed tracking and interaction.

So why is it so difficult for computers to "understand" the cup? The first big challenge is classification. Cups come in thousands of shapes, sizes, colors, and textures. Some cups also have special attributes and uses, so different cups suit different scenes and contexts.

To appreciate the difficulty, imagine writing an algorithm that teaches a computer all of the concepts above, or writing a few lines of code that explain to a computer the difference between a cup and a bowl.

If a single cup poses such a challenge, covering the thousands of kinds of objects in the world is harder still.

Today, smartphone-based AR can indeed place content in the surrounding environment, but it struggles to interact with that environment, which is why Apple and Google have both settled on tabletops and walls. Existing systems can't interact convincingly with our surroundings because they can "see" floors and walls but cannot "understand" them.

For the science-fiction AR we imagine to come true (for example, AR glasses that directly show the temperature of your coffee or the time remaining on the microwave), the system needs a much deeper "understanding" of the world around us.

So how do we cross this mountain? The answer will surely involve so-called "deep learning". Hand-writing classification algorithms for every type of object would be an enormously complex task, even for ordinary objects. Instead, we can train neural networks that adjust themselves automatically over time until they can reliably detect the common items around us.
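To make the point concrete, here is a deliberately naive attempt at "a few lines of code" for telling a cup from a bowl. The rule, the function name, and the measurements are all hypothetical; the sketch is only meant to show how quickly a hand-written rule breaks down.

```python
def naive_cup_or_bowl(height_cm: float, width_cm: float) -> str:
    """Hand-written rule: call anything taller than it is wide a cup."""
    return "cup" if height_cm > width_cm else "bowl"


# Hypothetical measurements for illustration.
mug = naive_cup_or_bowl(height_cm=10, width_cm=8)         # taller than wide
soup_bowl = naive_cup_or_bowl(height_cm=6, width_cm=15)   # wider than tall
wide_latte_cup = naive_cup_or_bowl(height_cm=7, width_cm=11)

print(mug, soup_bowl, wide_latte_cup)
```

The rule handles the mug and the soup bowl but labels the wide latte cup a bowl. Every patch for a case like this invites another exception, which is why hand-written rules scale so poorly.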

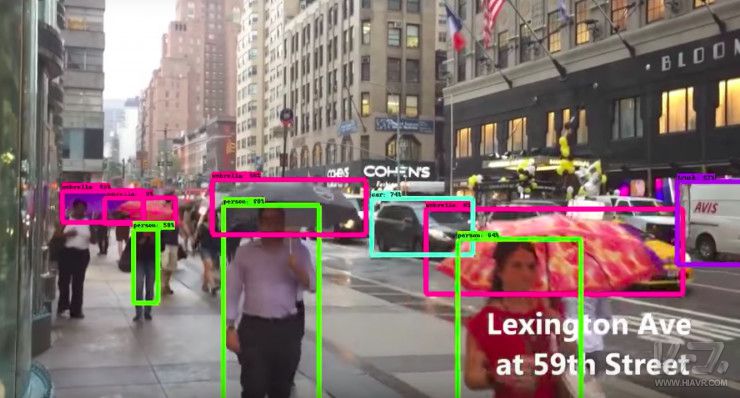

Some people in the industry have begun exploring this area and have made some breakthroughs. In the video below, the system shows a preliminary ability to tell apart people, umbrellas, traffic lights, and cars.

Object recognition using the TensorFlow API

Next, we need to greatly expand the range of object classes and then combine image-based detection with the real-time environment mapping data collected by the AR tracking system. Once we can give the AR system the ability to understand the surrounding world, we can begin to address the design challenges of the AR experience.

Adaptive AR design

The third challenge is also best explained by analogy. For web developers, reliable and practical design rules are the product of years of development, which is why web pages can adapt to different screen shapes. Compared with adaptive AR design, however, that is a simple task, because AR has to adapt to arbitrary environments in all three dimensions.
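One way to picture combining image-based detection with mapping data is to take a 2D detection and a depth value from the tracking system and place the object in 3D. The sketch below assumes a simple pinhole camera model; the `Detection` and `Intrinsics` types, the field names, and the numbers are hypothetical and do not come from any particular AR SDK.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Detection:
    """A 2D detection: class label plus pixel box (x_min, y_min, x_max, y_max)."""
    label: str
    box: Tuple[float, float, float, float]


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (assumed known from calibration)."""
    fx: float
    fy: float
    cx: float
    cy: float


def locate_in_camera_space(det: Detection, depth_m: float,
                           k: Intrinsics) -> Tuple[float, float, float]:
    """Back-project the box center to a 3D point in camera coordinates.

    Assumed convention: x to the right, y down, z forward, in meters.
    depth_m is the distance along z at the box center, assumed to come
    from the AR system's environment map.
    """
    x_min, y_min, x_max, y_max = det.box
    u = (x_min + x_max) / 2.0
    v = (y_min + y_max) / 2.0
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


# Hypothetical example: a cup detected right of the image center, 2 m away.
camera = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
cup = Detection(label="cup", box=(370.0, 190.0, 470.0, 290.0))
position = locate_in_camera_space(cup, depth_m=2.0, k=camera)
print(cup.label, position)
```

A real system would also need to transform this point into world coordinates and track it over time; this sketch covers only the back-projection step.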

This is not a simple problem. Even in VR game design, designers are still at an early stage of solving it and can only design for different play-space sizes. VR play spaces are generally square or rectangular and reserved for the player, whereas the problem AR has to solve is far more complicated.

Imagine that even next-door neighbors have completely different furniture and furnishings. It will take designers years of refinement to find ways to create convincing entertainment experiences, because such experiences must work across a nearly unlimited range of environments: from floor to ceiling, across millions of homes and buildings, and, of course, outdoors.

You may think that creating a simple AR shooting game is not difficult, with NPCs emerging from a particular room. But unless the environment has been mapped in advance, the AR system does not even know that the house has another room.

Assume that we have solved the object classification problem, that is, the system can already understand the objects around you at a human level. How can developers use this breakthrough to build games?

Suppose we want to build a simple farming game in which players grow crops in augmented reality and water them with a cup. What if there is no cup nearby? Is the game unplayable? Of course not. Developers are clever and have plenty of fallback plans: players can make a fist to stand in for the cup and tilt it to pour.

With those issues settled, we can start farming. American developers might expect players to have room for ten rows of corn, but for European players that much space is a luxury; there simply isn't that much room at home for AR entertainment.

This story could go on, but the point is that if we want immersive AR that is not limited to floors and walls, we need to design adaptive AR games and applications that make full use of the space and objects around us. With some clever design, we can keep the countless variables under control.

Adaptive AR design is the most difficult of the three challenges, but we can start working on the design in theory before hardware capable of meeting these needs arrives.

Over the past year, people have repeatedly argued that AR and VR are equally mature, but in reality AR is a few years behind VR. AR is indeed exciting, but there is tremendous room for improvement, from hardware to perception to design. AR is arriving at a good moment, and the field is wide open, with plenty of opportunity for newcomers to break through. If you are confident, now is a good time to get into AR.
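The farm example can be sketched as a small planning function that adapts to whatever space and objects the system reports. Everything here is a hypothetical illustration: the function name, the row spacing, and the label strings are assumptions, and the inputs are assumed to come from the object understanding and mapping discussed earlier.

```python
from typing import Dict, Set, Union


def plan_farm(free_width_m: float, detected_labels: Set[str],
              row_spacing_m: float = 0.5,
              max_rows: int = 10) -> Dict[str, Union[int, str]]:
    """Pick a crop layout and a watering tool for the player's room.

    free_width_m: width of clear floor space, assumed to come from the AR map.
    detected_labels: object classes the system recognized nearby.
    """
    if row_spacing_m <= 0:
        raise ValueError("row spacing must be positive")
    # Fit as many rows as the room allows, up to the designer's ideal count.
    rows = min(max_rows, max(0, int(free_width_m // row_spacing_m)))
    # Use a real cup when one is present; otherwise fall back to a fist gesture.
    tool = "cup" if "cup" in detected_labels else "fist_gesture"
    return {"rows": rows, "watering_tool": tool}


# Hypothetical rooms: a spacious one with a cup, a cramped one without.
spacious = plan_farm(10.0, {"cup", "table"})
cramped = plan_farm(1.2, {"sofa"})
print(spacious)
print(cramped)
```

The design choice is to degrade gracefully. The ideal of ten rows and a real cup is used when available, and the same game still runs with fewer rows and a gesture when it is not.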
